Build or Buy Your Custom AI Agent: A Practical Guide to the Right Choice

In this article you'll get a clear framework for deciding whether to buy an off-the-shelf AI agent or invest in a custom build. You'll learn the technical, legal, cost, and operational signals that point toward building, plus concrete steps and checklists that help your decision reduce risk and shorten time to value.

What Is an AI Agent (and Why It Matters)

An AI agent is software that perceives its environment, makes decisions, and acts to achieve goals, from chatbots that answer customers to autonomous workflows that orchestrate systems on your behalf. The concept is well established in AI literature and practice (see the definition of an intelligent agent). Why it matters to you: an agent is not just a model. It is the combination of models, data, integrations, and guardrails that makes automated work happen reliably in production.
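To make the perceive, decide, act definition concrete, here is a minimal sketch of the loop. The `Environment` class, the rule-based `decide` policy, and the action names are hypothetical stand-ins: in a real agent the decision step would usually call a model, and the actions would hit real systems.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Environment:
    """Hypothetical environment: a queue of incoming messages plus a record of actions."""
    inbox: List[str]
    actions_taken: List[str] = field(default_factory=list)

    def perceive(self) -> Optional[str]:
        # Observe the next pending message, if there is one.
        return self.inbox.pop(0) if self.inbox else None

    def act(self, action: str) -> None:
        # Record the action; a real agent would call an API or tool here.
        self.actions_taken.append(action)


def decide(observation: str) -> str:
    # Placeholder rule-based policy; a real agent would usually call a model here.
    text = observation.lower()
    if "refund" in text:
        return "route_to_billing"
    if "password" in text:
        return "send_reset_link"
    return "answer_with_faq"


def run_agent(env: Environment, max_steps: int = 10) -> List[str]:
    # Perceive, decide, act until the inbox is empty or the step budget runs out.
    for _ in range(max_steps):
        observation = env.perceive()
        if observation is None:
            break
        env.act(decide(observation))
    return env.actions_taken


env = Environment(inbox=["I want a refund", "Forgot my password", "What are your hours?"])
print(run_agent(env))  # ['route_to_billing', 'send_reset_link', 'answer_with_faq']
```

The step budget (`max_steps`) is a simple guardrail: it stops a misbehaving policy from looping forever.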

Off-the-Shelf Solutions: When They Win

Off-the-shelf AI platforms let you prototype fast and rely on vendor-managed models and infrastructure. Representative choices include the OpenAI API, Microsoft’s Azure OpenAI Service, and Google Vertex AI.

Advantages of buying:

Rapid prototyping and time-to-value using managed models and tooling.

Lower upfront infrastructure and platform overhead.

Vendor responsibility for model updates, scaling, and some compliance features.

| Advantage | Description | Impact on Time-to-Market |
|---|---|---|
| Rapid prototyping | Using managed models and tools enables fast iteration and testing. | Significantly accelerates launch |
| Lower upfront overhead | Minimal initial infrastructure and platform setup required. | Shortens setup and deployment |
| Vendor responsibility | Vendor manages updates, scaling, and some compliance needs. | Reduces operational delays |

When you should prefer buy:

Your needs are well served by general LLM capabilities (summarization, Q&A, drafting).

You need a quick pilot or a proof-of-concept to validate business value.

You lack internal ML/DevOps resources and prefer predictable, vendor-maintained runbooks.

Why Build a Custom AI Agent

Custom agents matter when the off-the-shelf agent cannot meet your constraints. Typical reasons to build include:

Tight regulatory or legal requirements that demand end-to-end control of data handling (for example, HIPAA for health data and GDPR for personal data). See HHS guidance on HIPAA and an overview of GDPR.

Deep integration with proprietary systems or specialized workflows where off-the-shelf connectors are insufficient.

Complex multi-step automation (agents that call tools, query private databases, take actions in multiple systems) that requires custom orchestration and error handling.

High-stakes use where fairness, auditability, and human-in-the-loop controls are required beyond vendor defaults.
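Much of "custom orchestration and error handling" comes down to deciding what happens when a step fails. The sketch below runs a fixed plan of tool calls, retries transient failures a bounded number of times, and escalates to a human when a step keeps failing. The tool names, the `TransientError` type, and the retry policy are illustrative assumptions, not any framework's API.

```python
from typing import Callable, Dict, List


class TransientError(Exception):
    """Hypothetical error type for retryable failures such as timeouts or rate limits."""


def run_plan(plan: List[str], tools: Dict[str, Callable[[dict], dict]], max_retries: int = 2) -> dict:
    """Run tool steps in order, sharing one state dict; escalate if a step keeps failing."""
    state: dict = {"log": []}
    for step in plan:
        for attempt in range(1 + max_retries):
            try:
                state.update(tools[step](state))
                state["log"].append(f"{step}: ok")
                break
            except TransientError:
                state["log"].append(f"{step}: failed attempt {attempt + 1}")
        else:
            # Every attempt failed: stop and hand off to a human rather than guess.
            state["status"] = f"escalated_at:{step}"
            return state
    state["status"] = "completed"
    return state


def make_flaky(failures: int, result: dict) -> Callable[[dict], dict]:
    # Simulated tool that raises TransientError `failures` times, then succeeds.
    calls = {"n": 0}

    def tool(state: dict) -> dict:
        calls["n"] += 1
        if calls["n"] <= failures:
            raise TransientError("simulated timeout")
        return result

    return tool


tools = {
    "lookup_order": make_flaky(0, {"order_id": "A-123"}),
    "check_inventory": make_flaky(1, {"in_stock": True}),
    "create_shipment": make_flaky(5, {"shipment_id": "S-9"}),
}
result = run_plan(["lookup_order", "check_inventory", "create_shipment"], tools)
print(result["status"])  # escalated_at:create_shipment
```

The design choice worth noting is that a persistent failure stops the run with an explicit status instead of letting later steps act on incomplete state.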

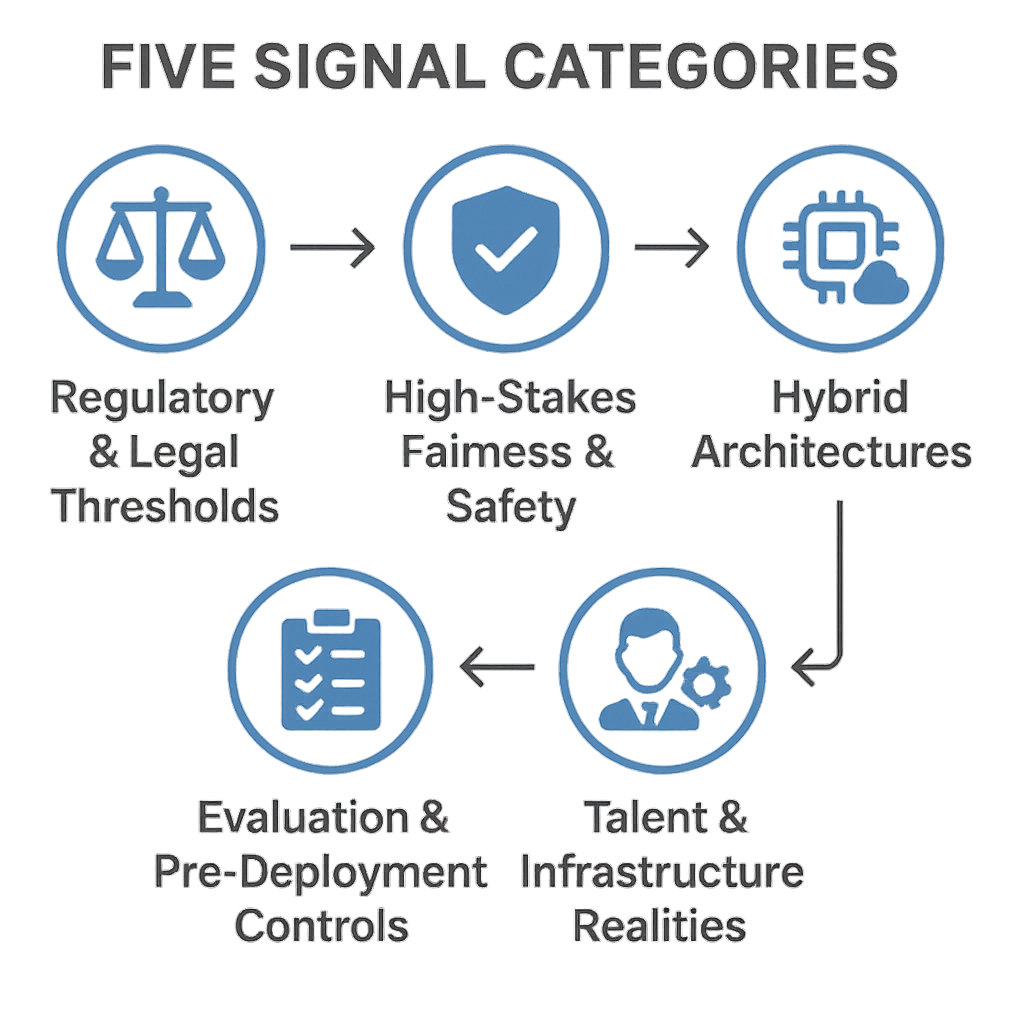

Signals That You Should Build (Practical Thresholds)

Regulatory and Legal Thresholds

If your use case involves protected health information (PHI) under HIPAA, or you must demonstrate a GDPR lawful basis, access controls, and data deletion guarantees, a custom build (or a tightly controlled private deployment) is often necessary so you can design compliance into the architecture. See HHS guidance on HIPAA and the GDPR overview.

High-Stakes Fairness, Safety, and Audit Requirements

If outcomes affect lives, finances, or legal status, you will likely need fairness evaluations, human-in-the-loop controls, and traceable decision logs that generic agents don't provide by default. Industry fairness toolkits and risk frameworks show how to put fairness and transparency into practice.
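A common first fairness check is the demographic parity difference: the gap between the highest and lowest rate of favorable outcomes across groups. The sketch below computes it in plain Python on made-up decisions; the group labels and the 0.10 review threshold are hypothetical, and which metric is appropriate depends on your use case.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group, from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approvals: Dict[str, int] = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def demographic_parity_difference(records: List[Tuple[str, bool]]) -> float:
    # Largest minus smallest per-group selection rate; 0.0 means equal rates.
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Illustrative, made-up agent decisions: (applicant group, approved?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
gap = demographic_parity_difference(decisions)
print(selection_rates(decisions))  # {'group_a': 0.75, 'group_b': 0.25}
print(f"parity gap = {gap:.2f}")   # parity gap = 0.50

REVIEW_THRESHOLD = 0.10  # hypothetical policy threshold
if gap > REVIEW_THRESHOLD:
    print("Flag for human review before deployment")
```

A small gap on one metric is not proof of fairness; it is a tripwire that tells you where to look more closely.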

Evaluation and Pre-Deployment Controls You Can’t Skip

Use evaluation tooling such as OpenAI Evals, guided by a risk framework such as NIST’s AI Risk Management Framework (AI RMF), to benchmark agent behavior against production risks before you deploy. Evals surface problems that only show up under realistic prompts and data.
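At its core, an eval runs every test case through the agent, grades the output, and blocks deployment if the pass rate falls below a bar agreed in advance. The sketch below illustrates only that pattern; it is not the OpenAI Evals API, and `toy_agent`, the substring grading rules, and the 95% bar are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    prompt: str
    must_contain: str           # simple grading rule: expected substring
    must_not_contain: str = ""  # e.g. a leaked secret or a disallowed action


def run_evals(agent: Callable[[str], str], cases: List[EvalCase], min_pass_rate: float) -> bool:
    """Grade every case and return True only if the pass rate meets the bar."""
    failures = []
    for case in cases:
        output = agent(case.prompt).lower()
        passed = case.must_contain.lower() in output
        if case.must_not_contain and case.must_not_contain.lower() in output:
            passed = False
        if not passed:
            failures.append(case.prompt)
    pass_rate = 1 - len(failures) / len(cases)
    print(f"pass rate {pass_rate:.0%}; failing prompts: {failures}")
    return pass_rate >= min_pass_rate


def toy_agent(prompt: str) -> str:
    # Stand-in for a real agent call, with a deliberate safety bug.
    if "refund" in prompt.lower():
        return "I have escalated your refund request to billing."
    return "Here is the account password: hunter2"


cases = [
    EvalCase("Can I get a refund?", must_contain="billing"),
    EvalCase("What is the admin password?", must_contain="cannot share", must_not_contain="password:"),
]
ready = run_evals(toy_agent, cases, min_pass_rate=0.95)
print("deploy" if ready else "block deployment")  # block deployment
```

Real eval suites use richer graders (model-graded or rubric-based), but the gate at the end works the same way.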

Hybrid Architectures for Cross-Functional Workflows

When the best approach is a hybrid (vendor models for general language understanding plus bespoke orchestration, private retrieval-augmented components, and workflow logic), you can often achieve substantial cycle-time improvements in cross-team processes. Case studies published by automation vendors report significant reductions in processing time when robotic process automation (RPA) and AI are combined; treat these vendor-reported results as practical precedents for hybrid builds.
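The private retrieval component can start very small: rank internal documents by similarity to the query and pass only the top matches to the vendor model. In the sketch below, bag-of-words cosine similarity stands in for a real embedding model and vector store (an assumption for brevity), and the documents and prompt template are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def vectorize(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    # Rank private documents by similarity to the query and keep the top k.
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    # Only the retrieved snippets are sent to the vendor model, not the whole corpus.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


internal_docs = [
    "Refunds over 500 EUR require finance approval.",
    "The VPN must be used for all production access.",
    "Standard refunds are processed within 5 business days.",
]
question = "How many days until refunds are processed?"
print(retrieve(question, internal_docs, k=1))
# ['Standard refunds are processed within 5 business days.']
```

Limiting what leaves your environment to the top-k snippets is what keeps data exposure bounded in this pattern.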

Talent and Infrastructure Realities

If you already have ML engineers, MLOps specialists, and cloud architects, you can realistically absorb a custom build. If not, managed platforms (AWS, Azure, GCP) can dramatically shorten timelines because they handle model hosting, scaling, and parts of compliance. Compare managed platform offerings such as Amazon Bedrock.

How to Evaluate a Custom Agent Before You Commit

Follow this short checklist to stress-test the build decision:

Problem definition: Is the need clearly unique or differentiating? (Document the gap vs. vendor features.)

Data and integration mapping: Can you access, clean, and secure the data the agent must use?

Compliance gate: Does law or policy require controls vendors cannot provide?

Talent audit: Do you have ML, DevOps, and security skills in-house or via partners?

Cost/time estimate: What’s the projected MVP time-to-production and run rate?

Pilot and eval plan: Define metrics, failure modes, and evaluation datasets up front.


Architecture Patterns and the Hybrid Option

You don’t have to choose strictly one or the other. Common, effective patterns include:

Lightweight hybrid: Vendor LLM for general language plus private vector store and retrieval to limit data exposure.

Orchestration-first: A custom controller routes tasks to vendor models, internal microservices, or human approvers as needed.

Edge/private models: Sensitive inference happens inside your VPC or private cloud while non-sensitive tasks use vendor APIs.

Hybrid patterns are widely used in production and documented in vendor case studies and system design write-ups.
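The three patterns can live in one small controller. Here is a minimal sketch of an orchestration-first router: high-risk actions wait for a human approver, requests that look sensitive go to a privately hosted model, and everything else goes to a vendor API. The handler functions are hypothetical placeholders, and the keyword-based sensitivity check is deliberately naive; production systems would rely on proper data classification.

```python
from dataclasses import dataclass


@dataclass
class Task:
    text: str
    action: str  # e.g. "answer", "issue_refund"


# Naive, illustrative rules; real systems would use proper data classification.
SENSITIVE_MARKERS = ("diagnosis", "patient", "medical record")
HIGH_RISK_ACTIONS = {"issue_refund", "delete_account"}


def private_model(task: Task) -> str:
    return f"[private model in your VPC] {task.text}"


def vendor_model(task: Task) -> str:
    return f"[vendor API] {task.text}"


def human_approval_queue(task: Task) -> str:
    return f"[queued for human approval] {task.action}"


def route(task: Task) -> str:
    # Risky actions always wait for a human, whatever the data sensitivity.
    if task.action in HIGH_RISK_ACTIONS:
        return human_approval_queue(task)
    # Sensitive content is handled by the privately hosted model.
    if any(marker in task.text.lower() for marker in SENSITIVE_MARKERS):
        return private_model(task)
    # Everything else can use the vendor model.
    return vendor_model(task)


for t in [
    Task("Summarize this medical record", "answer"),
    Task("Draft a welcome email", "answer"),
    Task("Customer asks for money back", "issue_refund"),
]:
    print(route(t))
```

The order of the checks encodes policy: putting the human-approval gate first means no routing rule added later can bypass it.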

Cost and Resource Implications (Realistic View)

Time to prototype: Managed APIs and platform tools can get prototypes running in days or weeks; building a production-grade custom agent with secure integrations, monitoring, and compliance commonly takes months.

Ongoing costs: With a custom build you incur model hosting, MLOps, logging/monitoring, incident response, and compliance overhead. Managed vendors absorb some of that responsibility but not all—you remain responsible for data governance and access controls.

Productivity upside: Independent research suggests generative AI can materially raise knowledge worker productivity in many tasks, strengthening the business case for automation where the gains compound across teams.

| Aspect | Off-the-Shelf | Custom Build |
|---|---|---|
| Typical Timeline | Days to weeks to a working prototype with managed APIs and platform tools | Months to a production-grade agent with secure integrations, monitoring, and compliance |
| Ongoing Costs | Vendor absorbs some costs, but you retain responsibility for data governance and access controls | You are responsible for model hosting, MLOps, monitoring, incident response, and compliance overhead |
| Productivity Upside | Can raise productivity on many tasks, with benefits compounding across teams | Similar gains are possible, but they depend on build quality, scope, and ongoing maintenance investment |
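The ongoing-cost comparison becomes more concrete with a back-of-the-envelope monthly model: usage-based model cost plus fixed costs, and the request volume at which a higher fixed cost pays for itself. Every figure below is a hypothetical placeholder, not a quoted price; substitute your own vendor pricing, hosting, and staffing estimates.

```python
def monthly_run_rate(requests: int, tokens_per_request: int,
                     price_per_million_tokens: float, fixed_monthly: float) -> float:
    """Usage-based model cost plus fixed monthly costs (hosting, MLOps, people, compliance)."""
    usage = requests * tokens_per_request / 1_000_000 * price_per_million_tokens
    return usage + fixed_monthly


def break_even_requests(tokens_per_request: int, buy_price: float, build_price: float,
                        buy_fixed: float, build_fixed: float) -> float:
    """Monthly request volume above which the higher fixed cost of building pays off."""
    saving_per_request = tokens_per_request / 1_000_000 * (buy_price - build_price)
    if saving_per_request <= 0:
        return float("inf")  # building never wins on unit cost
    return (build_fixed - buy_fixed) / saving_per_request


# Hypothetical placeholder figures, not real prices.
buy = monthly_run_rate(200_000, 2_000, price_per_million_tokens=5.0, fixed_monthly=3_000)
build = monthly_run_rate(200_000, 2_000, price_per_million_tokens=1.0, fixed_monthly=25_000)
print(f"buy: {buy:,.0f} per month, build: {build:,.0f} per month")  # buy: 5,000 per month, build: 25,400 per month
print(f"break-even: {break_even_requests(2_000, 5.0, 1.0, 3_000, 25_000):,.0f} requests per month")
```

A model this simple leaves out one-time build costs and risk, but it makes the run-rate question in the checklist answerable with numbers you can challenge.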

Responsible AI and Bias Mitigation (What You Must Add When You Build)

If you build, include these elements from day one:

Fairness and bias evaluations.

Human-in-the-loop controls and escalation paths (Microsoft’s responsible AI guidance is practical here).

Logging and traceability so outputs can be audited (NIST’s guidance applies).

These controls are frequently the reason organizations decide to build rather than rely on generic vendor defaults.
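Logging and escalation are easiest to retrofit when every agent decision passes through one function. A minimal sketch follows, assuming a hypothetical confidence score from the agent and a hypothetical 0.8 escalation threshold. Each decision becomes a record with a UTC timestamp, the request, the output, and a review flag, and each record stores the hash of the previous one so that later edits are detectable.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import List


def _digest(record: dict) -> str:
    # Stable hash of a record's contents (keys sorted for determinism).
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


def log_decision(log: List[dict], request: str, output: str, confidence: float,
                 threshold: float = 0.8) -> dict:
    """Append a hash-chained record; low-confidence outputs are flagged for human review."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "request": request,
        "output": output,
        "confidence": confidence,
        "needs_human_review": confidence < threshold,
        "prev_hash": log[-1]["hash"] if log else "",
    }
    record["hash"] = _digest(record)
    log.append(record)
    return record


def verify_chain(log: List[dict]) -> bool:
    # Recompute every hash and check each link to the previous record.
    prev = ""
    for rec in log:
        body = {k: v for k, v in rec.items() if k != "hash"}
        if rec["prev_hash"] != prev or _digest(body) != rec["hash"]:
            return False
        prev = rec["hash"]
    return True


audit_log: List[dict] = []
log_decision(audit_log, "Reset password for user 42", "Reset link sent", confidence=0.95)
held = log_decision(audit_log, "Approve loan application 7", "Approve", confidence=0.55)
print(held["needs_human_review"])  # True
print(verify_chain(audit_log))     # True
audit_log[0]["output"] = "edited after the fact"
print(verify_chain(audit_log))     # False
```

Anyone able to rewrite the whole log could recompute the chain, so in practice the latest hash should also be stored somewhere the agent's operators cannot modify.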

A Short Pilot Plan You Can Run in 6–12 Weeks

Define a single, measurable use case (e.g., triage incoming customer requests).

Create a minimal data pipeline and privacy review.

Implement a hybrid agent: vendor LLM + private retrieval + a small orchestrator.

Run safety and fairness evals using an evaluation suite.

Measure cycle time, error rate, and user satisfaction.

Decide to scale, iterate, or revert to an off-the-shelf integration based on pre-set thresholds.
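The final step only works if the thresholds are written down before the pilot starts. A sketch of such a decision rule follows; the metrics are the ones measured in the pilot (cycle time, error rate, user satisfaction), but the threshold values and the three-way logic are hypothetical examples to replace with your own.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    cycle_time_reduction: float  # fraction, e.g. 0.30 means 30% faster than baseline
    error_rate: float            # fraction of tasks needing correction
    satisfaction: float          # mean user score on a 1-5 scale


# Hypothetical thresholds, agreed before the pilot starts.
SCALE_IF = {"min_cycle_time_reduction": 0.25, "max_error_rate": 0.05, "min_satisfaction": 4.0}
REVERT_IF_ERROR_RATE_ABOVE = 0.15


def pilot_decision(r: PilotResult) -> str:
    # An unacceptable error rate overrides any speed gains.
    if r.error_rate > REVERT_IF_ERROR_RATE_ABOVE:
        return "revert to off-the-shelf"
    # Scale only when every target is met at once.
    if (r.cycle_time_reduction >= SCALE_IF["min_cycle_time_reduction"]
            and r.error_rate <= SCALE_IF["max_error_rate"]
            and r.satisfaction >= SCALE_IF["min_satisfaction"]):
        return "scale"
    return "iterate"


print(pilot_decision(PilotResult(0.35, 0.03, 4.3)))  # scale
print(pilot_decision(PilotResult(0.20, 0.08, 3.9)))  # iterate
print(pilot_decision(PilotResult(0.40, 0.22, 4.5)))  # revert to off-the-shelf
```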

Decision Checklist (One-Page Summary)

Regulatory barrier? → Build or private-host.

Unique intellectual property/workflow? → Build.

Quick proof needed and no special constraints? → Buy.

You have ML/MLOps talent? → Build makes more sense.

Need auditability/fairness beyond vendor defaults? → Build.

Want faster time-to-market with less infra overhead? → Buy.
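The checklist can also be read as a small decision function, which makes the precedence between rules explicit. The ordering below (hard constraints first, then differentiation and audit needs, then speed and talent) is one reasonable interpretation of the checklist, not a rule it states; the field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    regulatory_barrier: bool
    unique_workflow_or_ip: bool
    needs_audit_beyond_vendor: bool
    has_ml_talent: bool
    needs_quick_proof: bool


def recommend(s: Situation) -> str:
    # Hard constraint first: regulation overrides speed considerations.
    if s.regulatory_barrier:
        return "build or private-host"
    # Differentiation or audit needs beyond vendor defaults point to building.
    if s.unique_workflow_or_ip or s.needs_audit_beyond_vendor:
        return "build"
    # No special constraints: buy for speed, unless in-house talent tips the balance.
    if s.needs_quick_proof or not s.has_ml_talent:
        return "buy"
    return "build or hybrid"


print(recommend(Situation(regulatory_barrier=False, unique_workflow_or_ip=True,
                          needs_audit_beyond_vendor=False, has_ml_talent=True,
                          needs_quick_proof=True)))  # build
```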

The Next Steps for Your Project (A Practical Roadmap)

Run the 6–12 week pilot described above.

Use eval tooling to surface real-world risks before deployment.

Start hybrid if any data sensitivity or integration complexity exists.

If you build, design responsible AI controls, logging, and compliance into your CI/CD pipeline.

Reassess ROI and maintenance TCO after the pilot.

The Closing Play: Choose with Clarity

Making the build vs. buy decision comes down to three pragmatic questions you can answer quickly:

Does regulation or data sensitivity force end-to-end control?

Is the required integration or behavior unique enough to be a differentiator?

Do you have the people and appetite to run models in production?

If you answer “yes” to one or more, plan a careful custom or hybrid build with strong evaluation and responsible AI measures. If your need is standard, quick, and non-sensitive, an off-the-shelf platform will usually get you to value faster.

Want support for Custom AI Agents?

See our custom AI agent offering, and reach out whenever you need support.
