Edge Functions vs Cloudflare Workers: a practical guide plus advanced edge patterns

This article helps you decide when to run logic at the edge, explains how the main platforms work, and shows which patterns pay off in production. You’ll get a concise comparison of Vercel Edge Functions and Cloudflare Workers, the platform primitives to rely on, deployment and bundling tips, and five advanced, actionable patterns that many guides skip (WebAssembly, hybrid DB topologies, real-time multi-user coordination, edge rate limiting, and dependency strategies). Factual claims point to documentation or an authoritative writeup so you can follow up.

What Edge Functions and Cloudflare Workers are — the basics

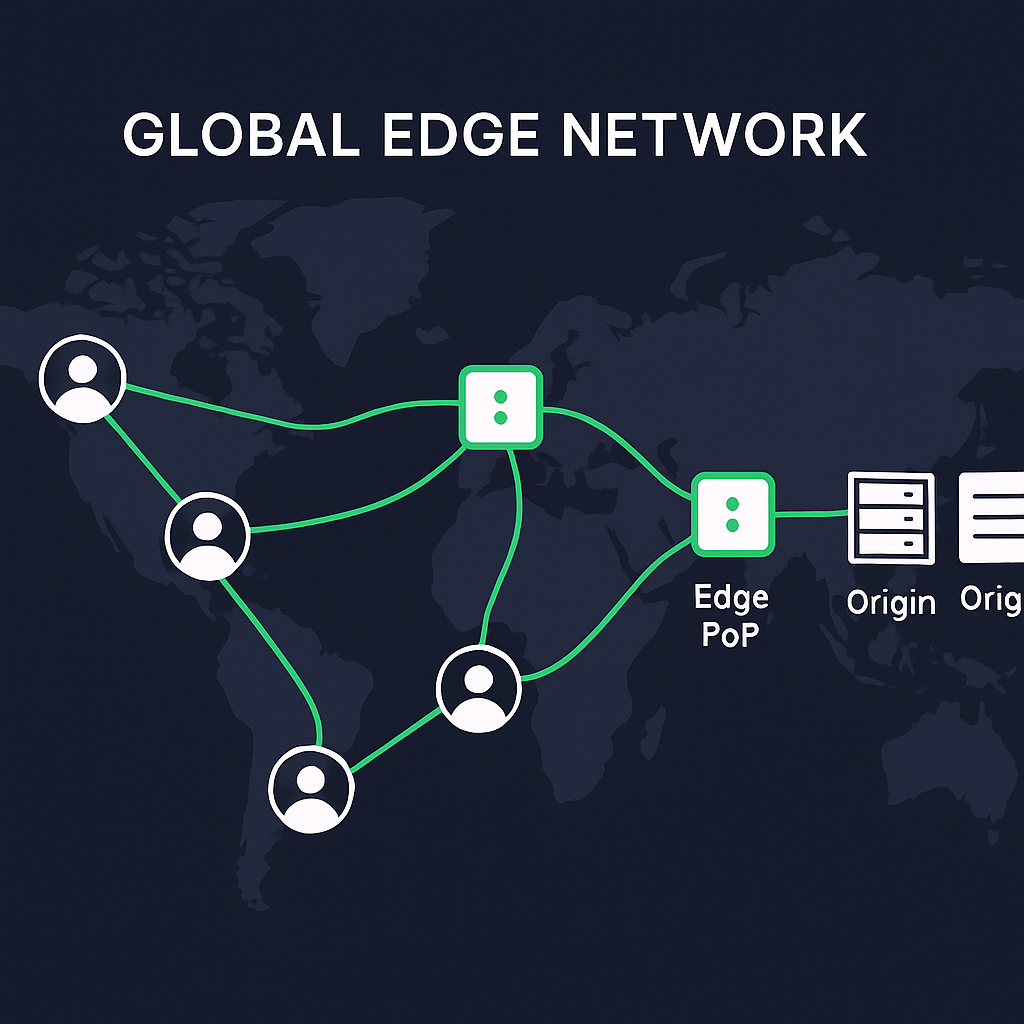

Edge Functions are serverless functions that run on geographically distributed points of presence (PoPs) close to your users. They reduce latency and handle request-time logic such as routing, personalization, or caching controls. See Vercel’s Edge Functions documentation for core concepts and common use cases like A/B testing, redirects, and authentication. The general practice of processing data closer to users is known as edge computing, which minimizes network hops and improves response times.

Cloudflare Workers are a similar serverless platform built on V8 isolates and the Service Worker API. They run JavaScript (and WebAssembly) across Cloudflare’s global network and are designed for low latency and high concurrency. Refer to the Cloudflare Workers documentation for an overview of the architecture, APIs, and core use cases.

How modern edge runtimes work

Edge runtimes run code inside lightweight V8 isolates (not full Node.js processes), giving fast startup and low memory overhead. You can explore the V8 JavaScript engine documentation to understand the underlying isolate model that powers both platforms. Because isolates avoid heavy process startup, edge platforms can offer near-zero cold starts and high concurrency for short, latency-sensitive handlers — Cloudflare’s serverless execution model and global deployment are covered in their Workers docs.

Vercel’s Edge Functions likewise run on a V8 runtime and integrate tightly with Next.js and other frameworks; see how they balance performance and API compatibility in the Vercel docs.

Common use cases where edge handlers shine

Edge handlers are ideal when you need to reduce roundtrips, modify requests or responses near users, or enforce lightweight security before hitting origin:

Personalization and geo-routing (serve region-specific content)

A/B testing and feature flags at request time

Authentication and redirects (fast auth checks)

API gateways, response transformation, and caching logic
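As a sketch of the A/B testing case above, the snippet below assigns a visitor to a variant deterministically, so the same visitor sees the same variant at every PoP without a shared lookup. The helper names (`fnv1a`, `assignVariant`) and the choice of FNV-1a are assumptions made for illustration, not something either platform prescribes.

```typescript
/** 32-bit FNV-1a hash over the string's UTF-16 code units, as an unsigned integer. */
function fnv1a(input: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    // Multiply by the FNV prime (16777619) with 32-bit integer semantics.
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // reinterpret as unsigned
}

/** Deterministically map a visitor to one variant of a named experiment. */
function assignVariant(visitorId: string, experiment: string, variants: string[]): string {
  if (variants.length === 0) {
    throw new Error("at least one variant is required");
  }
  // Including the experiment name keeps buckets independent across experiments.
  const bucket = fnv1a(`${experiment}:${visitorId}`) % variants.length;
  return variants[bucket];
}
```

In a handler, `visitorId` would typically come from a cookie or another stable identifier, and the chosen variant can drive a rewrite or a response header.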

For a practical comparison of when each platform makes sense for latency-sensitive JavaScript apps, check out freeCodeCamp’s JavaScript on the Edge comparison.

Platform building blocks to know

Cloudflare exposes several edge primitives you can use as building blocks:

Workers KV: globally distributed key–value store with eventually consistent reads

Durable Objects: strongly consistent, single-writer objects for coordination or stateful endpoints

R2: S3-compatible object storage

D1: SQLite-based relational database for edge queries

| Primitive | Type | Description |
|---|---|---|
| Workers KV | Key–value store | Globally distributed store with eventually consistent reads |
| Durable Objects | Stateful coordination | Strongly consistent single-writer objects for coordination or stateful endpoints |
| R2 | Object storage | S3-compatible object storage |
| D1 | Relational DB | SQLite-based relational database for edge queries |

Deployment and developer experience

Cloudflare Workers — develop, test, and deploy via the Wrangler CLI or web dashboard.

Vercel Edge Functions — deploy directly from the Vercel platform and integrate into Next.js routes or middleware.

Both platforms provide local development tooling and testing hooks, with debugging and emulation support to streamline your workflow.

Limits and best practices to respect

Edge runtimes trade full Node API availability for smaller, faster isolates. Typical constraints include execution time limits, binary size limits, and restricted Node globals or modules; both platforms document their runtime limits and recommended patterns. Common guidance:

Keep handler code small and avoid heavy CPU work in JavaScript, since execution time per request is limited.

Avoid large polyfills and Node-only libraries; prefer edge-safe modules or compile to lighter bundles.

Five advanced edge patterns most guides skip

These patterns move you beyond simple routing and caching into architectural choices that materially change system behavior.

WebAssembly on the edge for CPU or security-sensitive tasks

Compile Rust, C, or Go to WebAssembly and call that module from your JS handler to perform CPU-heavy math, parsing, or cryptographic work near the user. See MDN’s WebAssembly documentation for details on security and performance considerations that make WASM a good fit for edge execution.

Use cases:

Image or document processing where latency matters

Deterministic parsing or sandboxed plugin code

Cryptographic verification handled outside your JS heap
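To make the JS-to-WASM call path concrete, here is a minimal sketch that loads a tiny hand-assembled module exporting `add(i32, i32) -> i32` and calls it from TypeScript. The inline bytes stand in for a module you would normally compile from Rust, C, or Go, and `sumInWasm` is a hypothetical helper. Some edge runtimes restrict compiling WASM from raw bytes at request time, so in deployment you would usually import a precompiled `.wasm` file through the platform's build tooling instead.

```typescript
// Binary for: (module (func (export "add") (param i32 i32) (result i32)
//               local.get 0 local.get 1 i32.add))
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // "\0asm" magic + version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export "add" = func 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // body
]);

// Synchronous compilation is acceptable here only because the module is tiny.
const wasmModule = new WebAssembly.Module(wasmBytes);
const wasmInstance = new WebAssembly.Instance(wasmModule, {});

// Exports are untyped at the boundary, so the signature is asserted by hand.
const add = wasmInstance.exports.add as (a: number, b: number) => number;

/** Sum integers by delegating each addition to the WASM export. */
function sumInWasm(values: number[]): number {
  return values.reduce((total, value) => add(total, value), 0);
}
```

Crossing the JS/WASM boundary has a cost of its own, so real modules tend to expose coarse-grained functions (parse a whole buffer, verify a whole signature) rather than per-element calls like this one.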

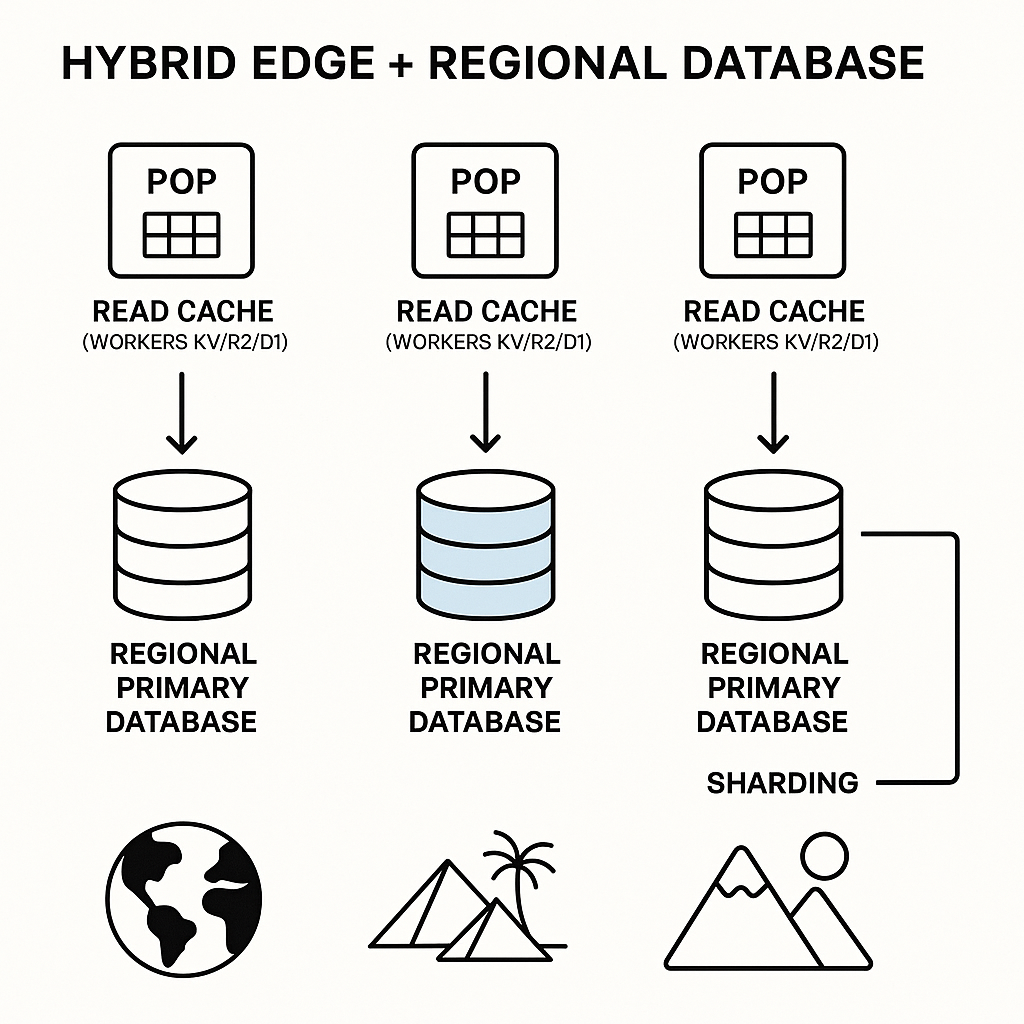

Hybrid edge + regional architecture for databases

A practical pattern is “read-mostly at the edge, write-through to regional backends.” Serve cached or read-only data from PoPs (Workers KV, R2, or D1 for small relational queries) and forward writes to regional databases to preserve consistency and transactional semantics. For deeper insights on architecting edge applications, see LogRocket’s guide to edge application architecture.

Pattern options:

Read cache at the edge (KV/R2) + write to regional primary (HTTP API to region).

Read replica in region + edge cache invalidation on writes.

Geographic sharding: route writes to region owning that shard and read locally.

Latency-aware routing (choose the nearest region for writes when multi-region writes are supported) reduces tail latency and avoids unnecessary cross-continent hops.
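The routing decision behind these options can be sketched as a small pure function: reads are answered from the edge cache, and writes go to the region that owns the shard. The region names, the shard map, and `routeRequest` are hypothetical and only illustrate the geographic-sharding option; a real deployment would load the map from configuration.

```typescript
type Region = "us-east" | "eu-west" | "ap-south"; // hypothetical region names

interface RoutingDecision {
  target: "edge-cache" | "regional-primary";
  region?: Region; // set only when the request must leave the PoP
}

// Hypothetical shard ownership table (shard key -> owning region).
const SHARD_OWNERS = new Map<string, Region>([
  ["us", "us-east"],
  ["eu", "eu-west"],
  ["ap", "ap-south"],
]);

/** Decide where a request is served: reads locally, writes at the shard owner. */
function routeRequest(method: string, shardKey: string): RoutingDecision {
  const isRead = method === "GET" || method === "HEAD";
  if (isRead) {
    return { target: "edge-cache" };
  }
  const region = SHARD_OWNERS.get(shardKey);
  if (region === undefined) {
    throw new Error(`unknown shard: ${shardKey}`);
  }
  return { target: "regional-primary", region };
}
```

After a successful write, the handler would also invalidate or refresh the matching edge cache entry so later reads do not serve stale data for longer than the application tolerates.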

Real-time, multi-user coordination at the edge

Durable Objects provide a single-writer, strongly consistent primitive to hold ephemeral state and coordinate multi-user sessions (presence, cursors, locks) without round-tripping to a central hub. Cloudflare’s Durable Objects documentation includes example patterns for chat and collaborative features.

How to think about it:

Map a room/document ID to a Durable Object instance so that all coordination for that room runs in one place.

Use WebSockets or WebRTC for transport, with a Durable Object holding authoritative state and short-lived sync.

For global scale, partition rooms by geography or shard IDs to keep most coordination local and accept eventual consistency between shards for non-critical state.
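The sketch below models the authoritative state such an object might hold, written as a plain class so it can run anywhere. `RoomState`, `roomObjectName`, and the version counter are illustrative assumptions. On Cloudflare, the derived name would be passed to the Durable Object namespace's `idFromName` to reach the single instance for that room, and the class logic would live inside the object.

```typescript
interface Cursor {
  x: number;
  y: number;
}

interface Member {
  userId: string;
  cursor: Cursor | null; // null until the user first moves
}

/** Single-writer room state: every mutation bumps a version clients can sync on. */
class RoomState {
  private members = new Map<string, Cursor | null>();
  private version = 0;

  join(userId: string): number {
    if (!this.members.has(userId)) {
      this.members.set(userId, null);
      this.version++;
    }
    return this.version;
  }

  leave(userId: string): number {
    if (this.members.delete(userId)) {
      this.version++;
    }
    return this.version;
  }

  moveCursor(userId: string, cursor: Cursor): number {
    if (!this.members.has(userId)) {
      throw new Error(`${userId} is not in the room`);
    }
    this.members.set(userId, cursor);
    return ++this.version;
  }

  /** Full state for newly connected clients. */
  snapshot(): { version: number; members: Member[] } {
    const members: Member[] = [];
    this.members.forEach((cursor, userId) => members.push({ userId, cursor }));
    return { version: this.version, members };
  }
}

/** Shard-aware object name: the region prefix keeps most coordination local. */
function roomObjectName(region: string, roomId: string): string {
  return `${region}:${roomId}`;
}
```

Because only one instance handles a given room, the class needs no locking; the version number lets clients detect missed updates and request a fresh snapshot.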

Edge-native rate limiting and abuse detection

Implement per-PoP or per-user quotas at the edge to block abusive traffic before it reaches origin:

Use Workers KV or edge storage to maintain token-bucket counters with short TTLs for rate windows, accepting that eventually consistent storage yields approximate limits.

Use Durable Objects as single-writer counters for precise, consistent limits per key.

Combine edge checks with Cloudflare Rate Limiting rules for DDoS-level protection.

This keeps detection and throttling local, reduces origin load, and can feed scores (bot score, abuse risk) back to a central analytics pipeline asynchronously.
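A minimal token-bucket sketch shows the counting logic behind these options. It keeps state in memory and takes the current time as a parameter, which are simplifications made for illustration; in practice the bucket state would live in whichever store you choose (for example, inside a Durable Object when limits must be exact). The class names are hypothetical.

```typescript
/** Token bucket: holds up to `capacity` tokens, refilled continuously over time. */
class TokenBucket {
  private capacity: number;
  private refillPerSecond: number;
  private tokens: number;
  private lastRefillMs: number;

  constructor(capacity: number, refillPerSecond: number, nowMs: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.tokens = capacity; // start full so initial bursts are allowed
    this.lastRefillMs = nowMs;
  }

  /** Refill for the elapsed time, then try to spend `cost` tokens. */
  tryConsume(nowMs: number, cost = 1): boolean {
    const elapsedSeconds = Math.max(0, nowMs - this.lastRefillMs) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSeconds * this.refillPerSecond);
    this.lastRefillMs = nowMs;
    if (this.tokens < cost) {
      return false;
    }
    this.tokens -= cost;
    return true;
  }
}

/** One bucket per key (for example, a client IP or user ID). */
class RateLimiter {
  private buckets = new Map<string, TokenBucket>();
  private capacity: number;
  private refillPerSecond: number;

  constructor(capacity: number, refillPerSecond: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
  }

  allow(key: string, nowMs: number): boolean {
    let bucket = this.buckets.get(key);
    if (bucket === undefined) {
      bucket = new TokenBucket(this.capacity, this.refillPerSecond, nowMs);
      this.buckets.set(key, bucket);
    }
    return bucket.tryConsume(nowMs);
  }
}
```

A handler would call `allow(key, Date.now())` and return a 429 response when it yields `false`. This sketch never evicts idle keys, so a production version needs a TTL or size cap on the bucket map.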

Advanced bundling and dependency strategies for edge runtimes

Because edge environments limit binary size and available APIs, adopt these tactics:

Split code into "edge" and "server" bundles so large Node modules stay in regional functions while tiny handlers run at PoPs.

Use lightweight bundlers (esbuild/rollup) to minify and tree-shake.

Avoid polyfills for Node globals; prefer native Web APIs available in isolates.

Practical steps:

Audit dependencies with a bundler analyzer.

Replace heavy libs (e.g., full lodash) with targeted utilities or native code.

Keep hot paths tiny and offload non-latency logic to regional functions or background queues.
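As an illustration of replacing a heavy utility library with targeted code, here are small native equivalents for three commonly imported helpers. They cover the common cases only and are not drop-in replacements for lodash's full edge-case behavior (for example, `chunk` here throws on an invalid size rather than returning an empty array).

```typescript
/** Copy only the listed keys from an object. */
function pick<T extends object, K extends keyof T>(source: T, keys: readonly K[]): Pick<T, K> {
  const result = {} as Pick<T, K>;
  for (const key of keys) {
    if (key in source) {
      result[key] = source[key];
    }
  }
  return result;
}

/** Split an array into consecutive groups of at most `size` items. */
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const chunks: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}

/** Keep the first item seen for each derived key, preserving order. */
function uniqBy<T, K>(items: readonly T[], keyOf: (item: T) => K): T[] {
  const seen = new Set<K>();
  const result: T[] = [];
  for (const item of items) {
    const key = keyOf(item);
    if (!seen.has(key)) {
      seen.add(key);
      result.push(item);
    }
  }
  return result;
}
```

Helpers like these add a few hundred bytes to a bundle, which is the kind of saving a bundle analyzer makes visible when it flags a whole-library import.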

Observability, testing, and debugging

Monitoring edge functions requires capturing logs and traces at the PoP and correlating them with backend traces. Both Cloudflare and Vercel provide guidance for local testing, log capture, and structured observability integrations to help you identify and resolve issues quickly.
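One way to correlate edge logs with backend traces is to read the W3C Trace Context `traceparent` header and stamp its trace ID on every structured log line. The sketch below assumes that header format (version `00`); `parseTraceparent`, `edgeLogLine`, and the `pop` field are hypothetical names, and the PoP identifier is assumed to be something your platform exposes to the handler.

```typescript
interface TraceContext {
  traceId: string; // 32 lowercase hex characters
  parentId: string; // 16 lowercase hex characters
  sampled: boolean;
}

// traceparent, version 00: "00-<trace-id>-<parent-id>-<flags>"
const TRACEPARENT_PATTERN = /^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/;

/** Parse a traceparent header value; returns null when absent or malformed. */
function parseTraceparent(header: string | null): TraceContext | null {
  if (!header) {
    return null;
  }
  const match = TRACEPARENT_PATTERN.exec(header.trim());
  if (!match) {
    return null;
  }
  const [, traceId, parentId, flags] = match;
  // All-zero IDs are invalid under the Trace Context specification.
  if (/^0+$/.test(traceId) || /^0+$/.test(parentId)) {
    return null;
  }
  return { traceId, parentId, sampled: (parseInt(flags, 16) & 1) === 1 };
}

/** Build one JSON log line that carries the trace ID and the serving PoP. */
function edgeLogLine(ctx: TraceContext | null, pop: string, fields: Record<string, unknown>): string {
  return JSON.stringify({ traceId: ctx ? ctx.traceId : null, pop, ...fields });
}
```

Forwarding the same header to origin requests lets the backend tracer attach its spans to the trace that started at the edge.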

Choosing between Vercel Edge Functions and Cloudflare Workers

Choose Vercel Edge Functions when you want tight Next.js integration, an opinionated developer flow, and simple middleware-style handlers close to your static assets.

Choose Cloudflare Workers when you need a very mature global network, primitives like Durable Objects and R2, or explicit control over edge storage and coordination.

| Feature | Vercel Edge Functions | Cloudflare Workers |
|---|---|---|
| Integration | Next.js integration | Mature global network & primitives |
| Deployment Flow | Opinionated developer flow | CLI & dashboard |
| Storage Primitives | Edge Middleware | KV, Durable Objects, R2, D1 |
| Latency | Close to static assets | Distributed global PoPs |
| Use Cases | Middleware-style routing & personalization | Advanced storage, coordination, and custom edge logic |

Ready to ship — practical next steps

Pick a single, high-value edge use case (geo-routing, auth pre-check, image transform) and implement it as an Edge Function or Worker.

Measure end-to-end latency from multiple regions and compare to origin routing.

If you expect heavy CPU work, prototype a WASM module and benchmark it in an edge runtime.

For stateful workflows (chat, locks), experiment with Durable Objects to measure local coordination benefits.
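For the latency measurement step, comparing percentiles rather than averages makes tail behavior visible. The sketch below uses the nearest-rank percentile method; the function names and the sample numbers in the usage note are made up for illustration and are not measurements.

```typescript
/** Nearest-rank percentile of latency samples in milliseconds (p in 0..100). */
function percentile(samplesMs: readonly number[], p: number): number {
  if (samplesMs.length === 0) {
    throw new Error("no samples");
  }
  if (p < 0 || p > 100) {
    throw new RangeError("p must be between 0 and 100");
  }
  const sorted = [...samplesMs].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

interface RouteComparison {
  p50SavedMs: number; // positive means the edge route is faster at the median
  p95SavedMs: number; // positive means the edge route is faster at the tail
}

/** Compare edge and origin routes for one region. */
function compareRoutes(edgeMs: readonly number[], originMs: readonly number[]): RouteComparison {
  return {
    p50SavedMs: percentile(originMs, 50) - percentile(edgeMs, 50),
    p95SavedMs: percentile(originMs, 95) - percentile(edgeMs, 95),
  };
}
```

With made-up samples such as `compareRoutes([20, 25, 30, 35, 200], [80, 90, 100, 110, 120])`, the median improves by 70 ms while the p95 gets 80 ms worse, which is the kind of tail regression an average would hide.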

